📈 The Top Stock in 2025

The S&P 500’s best performer wasn’t on your bingo card

Welcome to the Free edition of How They Make Money.

Over 260,000 subscribers turn to us for business and investment insights.

🎉 Welcome to 2026

New year, same obsession: How market leaders actually make money.

While the internet is busy making bold predictions and hot takes, we’re starting the year the only way we know how: By following the money. 💰

Today at a glance:

📈 Sandisk Absolutely Crushed 2025

🧠 Meta Bets on AI Startup Manus

🤖 NVIDIA + Groq: The $20B Deal

🔎 Gemini’s Very Good Year

📈 Sandisk Absolutely Crushed 2025

If you spent 2025 watching only the Mag 7, you missed one of the big stories of the year. The best-performing stock in the S&P 500 wasn’t a hyperscaler or an LLM designer. It was a company that didn’t even exist as an independent entity at the start of the year.

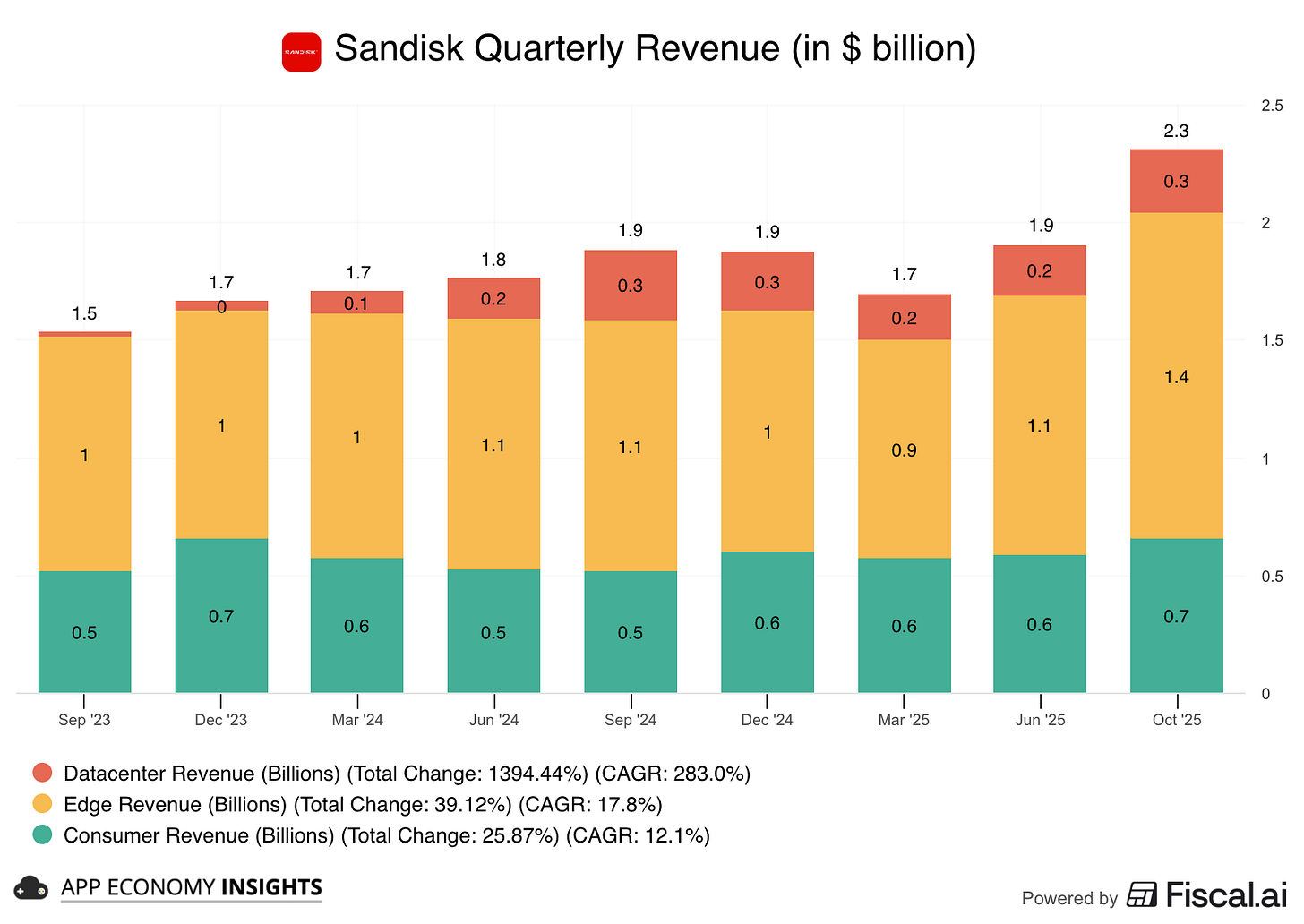

Sandisk (SNDK) delivered a staggering ~594% return since its spinoff from Western Digital (WDC) in February 2025.

For years, Western Digital’s stock was dragged down by a conglomerate discount, trying to run a legacy Hard Drive business and a high-growth Flash business under one roof. The spinoff finally freed Sandisk to be valued on its own merits. It became a pure-play on the next phase of the AI buildout.

But a nearly 7x surge in under a year requires more than just a corporate reshuffle. It requires a fundamental misunderstanding by the market of how a company makes money. That misunderstanding was amplified by years of NAND price cyclicality, which trained investors to treat every storage rally as temporary.
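The “~594%” and “nearly 7x” figures describe the same move, just in different units. Here is a minimal sketch of the conversion; the helper name `return_to_multiple` is ours, made up for illustration.

```python
def return_to_multiple(pct_return: float) -> float:
    """Convert a percentage return into a price multiple (ending value / starting value)."""
    return 1 + pct_return / 100


# ~594% is the return since the spinoff quoted above.
multiple = return_to_multiple(594)
print(f"{multiple:.2f}x")  # a +594% return means the price ended at about 6.94 times where it started
```

A +100% return is a 2x, not a 1x, which is why a ~594% gain lands just shy of 7x rather than 6x.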

It’s not just USB drives

Many investors still associate Sandisk with the little SD card in their camera. While that Consumer segment still provides cash flow, it’s no longer the engine.

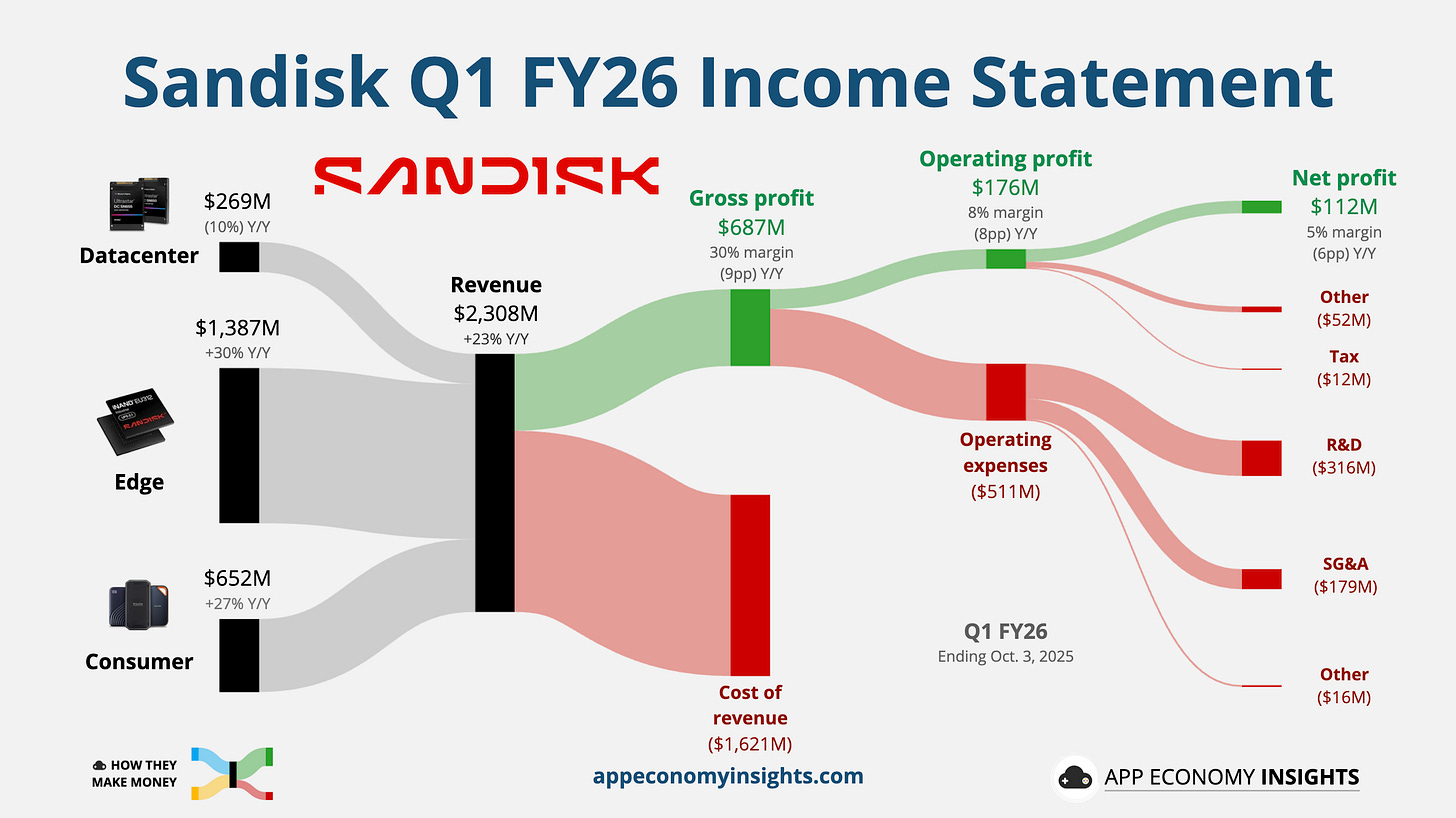

Sandisk makes NAND Flash memory, the essential technology for fast, non-volatile storage. Its revenue is now driven by three distinct pillars:

Datacenter: High-performance Enterprise SSDs for hyperscalers (like AWS and Google Cloud). These deployments carry longer contracts and higher switching costs. They also weigh disproportionately on valuation, because they turn volatile NAND pricing into infrastructure-like revenue streams tied to AI training workloads.

Edge: The flash memory inside AI smartphones, PCs, Internet of Things (IoT) devices, and autonomous vehicles. As devices run more AI models locally (on-device AI), they need massive storage upgrades.

Consumer: The portable SSDs and memory cards we all know.

AI Multiple Valuation

Sandisk’s 2025 story was all about multiple expansion. The market stopped valuing Sandisk like a NAND supplier on a single-digit earnings multiple and began pricing it closer to AI infrastructure peers with durable demand visibility.

In early 2025, the market valued Sandisk like a commodity hardware seller (a low P/E multiple). By mid-year, the narrative shifted. Investors realized that if AI is the new electricity, high-speed storage is the copper wire beneath it. Invisible, essential, and impossible to scale without.

Sandisk was “re-rated” from a boring hardware stock to critical AI infrastructure.
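To see why a re-rating can move a stock more than earnings do, it helps to split a price change into its two parts. Price is earnings per share times the P/E multiple, so the price move is the earnings move times the multiple move. The sketch below uses hypothetical round numbers chosen for illustration only; they are not Sandisk’s actual EPS or P/E, and the `Snapshot` and `decompose` names are ours.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    eps: float  # earnings per share, in dollars
    pe: float   # price-to-earnings multiple

    @property
    def price(self) -> float:
        # Share price implied by earnings and the multiple the market pays for them.
        return self.eps * self.pe


def decompose(start: Snapshot, end: Snapshot) -> dict[str, float]:
    """Split a price move into an earnings factor and a multiple factor."""
    return {
        "price_factor": end.price / start.price,
        "earnings_factor": end.eps / start.eps,
        "multiple_factor": end.pe / start.pe,
    }


# Hypothetical inputs for illustration only; NOT Sandisk's reported figures.
before = Snapshot(eps=2.0, pe=8.0)    # valued like a commodity hardware seller
after = Snapshot(eps=3.0, pe=24.0)    # valued like infrastructure

parts = decompose(before, after)
print(parts)
```

In this made-up case the price rises 4.5x while earnings rise only 1.5x. The other 3x comes entirely from investors paying a higher multiple, which is what “multiple expansion” means.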

This re-rating was so powerful that the stock ripped higher even as gross margins compressed. Why? Investors recognized that Sandisk was executing a deliberate land grab: accepting near-term margin pressure to ramp enterprise volumes and lock in long-term relationships with hyperscalers building out their AI data centers for the next decade.

What’s the moat? Sandisk’s advantage relies on the high switching costs of the “Qualification Cycle.” In the AI era, hyperscalers cannot simply swap out storage components on a whim. Drives must undergo grueling 6-to-12-month testing regimens to ensure they don’t cause latency spikes that idle expensive GPUs. Once Sandisk wins a socket and becomes the “Plan of Record,” it locks in that revenue for the server’s entire 3-to-5-year lifecycle.

Furthermore, while competitors like Samsung are prioritizing HBM capacity for GPUs, Sandisk has capitalized on the less crowded lane of Enterprise SSDs, using specialized controllers and firmware to turn what used to be a commodity component into a sticky, essential layer of the AI infrastructure stack.

Takeaway: Spinoffs often unlock massive value by stripping away narrative noise and forcing markets to reprice the underlying economics. But more importantly, 2025 proved that the boring infrastructure layers of a tech boom can be far more lucrative than the flashy consumer apps built on top of them.

🧠 Meta Bets on AI Startup Manus

Meta Platforms just made another bold AI move, agreeing to acquire Manus for more than $2 billion in a deal reportedly struck in just 10 days.

It might sound like a steep price, but Manus isn’t a typical chatbot. It’s a general-purpose AI agent designed to plan tasks, pull in tools, and deliver finished work. Think research reports, code, data analysis, or even websites, with minimal human input.

Manus was founded in China in 2022 and later moved to Singapore. It officially launched its agent in March 2025.

Key numbers

~100 Manus employees.

~$85 million raised so far.

~$125 million in ARR (crossed $100 million within 8 months of launch).

That puts Manus in rare territory for enterprise software adoption.

Meta plans to continue selling Manus as a standalone product while integrating its agent capabilities across Meta’s ecosystem: Facebook, Instagram, WhatsApp, ads, and AI hardware.

Why it matters

Meta has world-class models and massive distribution, but it has lagged rivals like OpenAI’s ChatGPT and Google’s Gemini in real, task-oriented AI applications. Manus fills that gap immediately.

Instead of waiting years to build an agent platform from scratch, Meta is buying:

A proven agent workflow.

Paying enterprise customers.

A subscription revenue stream.

A team already shipping in production.

It also gives Meta a path to meaningful AI revenue beyond ads.

Takeaway: This deal is all about monetization. Meta is using its balance sheet to shortcut the hardest part of AI: turning impressive technology into products businesses actually pay for. At $2 billion, Manus is expensive. But at $125 million in ARR in under a year, it gives Meta something rare in AI today: traction with economics attached.
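For a rough sense of what “expensive” means here, the key numbers above can be turned into a few ratios. This is a back-of-envelope sketch: it treats the “more than $2 billion” price as exactly $2 billion (so the multiples are floors) and uses the approximate headcount, funding, and ARR figures quoted above. The `deal_metrics` helper is ours, not a standard valuation tool.

```python
def deal_metrics(price_musd: float, arr_musd: float,
                 raised_musd: float, employees: int) -> dict[str, float]:
    """Back-of-envelope acquisition ratios. Dollar inputs are in $ millions."""
    return {
        "price_to_arr": price_musd / arr_musd,               # how many years of ARR the price equals
        "price_to_capital_raised": price_musd / raised_musd,  # price relative to total funding
        "arr_per_employee_musd": arr_musd / employees,        # revenue efficiency per head
    }


# Approximate figures from the newsletter; the $2B price is a lower bound.
manus = deal_metrics(price_musd=2_000, arr_musd=125, raised_musd=85, employees=100)
for name, value in manus.items():
    print(f"{name}: {value:.2f}")
```

On these inputs Meta is paying at least 16 times ARR and roughly 23.5 times the capital Manus raised, for a team generating about $1.25 million of ARR per employee.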

🤖 NVIDIA + Groq: The $20B Deal

In a move that stunned Wall Street over the holiday break, NVIDIA announced a definitive agreement to pay $20 billion in cash to license the technology and hire the core engineering team of AI chip startup Groq.

This is not a traditional acquisition. Groq will continue to exist as an independent entity (renamed GroqCloud), led by former CFO Simon Edwards. However, NVIDIA is effectively absorbing Groq’s “soul”—its proprietary LPU (Language Processing Unit) patents and its visionary leadership, including founder Jonathan Ross (creator of the Google TPU).

Why now? To understand this deal, you have to look at the market shift that defined late 2025. We recently crossed a tipping point known as the Inference Flip. Global revenue from using AI models (inference) has officially surpassed the revenue from building them (training).

While NVIDIA’s GPUs are the undisputed kings of training (massive throughput), they have a vulnerability: Latency.

NVIDIA GPUs are like freight trains. Incredible capacity to haul massive data, but slow to get up to speed.

Groq LPUs are like Formula 1 cars. Lightweight, instant acceleration, and designed purely for real-time speed.

By securing Groq’s technology, NVIDIA solves its only real weakness: real-time, low-latency inference for agents and voice AI.

What it means:

Regulatory shenanigans: By structuring this as a “licensing and hiring” deal rather than a merger, NVIDIA sidesteps the antitrust gridlock that killed its attempted Arm acquisition. It mirrors the strategy Microsoft used with Inflection AI in 2024.

Capital efficiency: $20 billion sounds like a lot, but for NVIDIA, it represents just one quarter of Free Cash Flow. The company essentially used three months of cash generation to neutralize a systemic threat and secure the next decade of inference dominance.

The moat widens: This deal deprives competitors (like Google and AMD) of some of the industry’s best inference talent. NVIDIA is no longer just selling shovels for the AI gold rush. It now owns the distribution network for the gold itself.

Takeaway: NVIDIA just signaled that it refuses to be disrupted from below. By integrating Groq’s speed into the upcoming Rubin architecture, NVIDIA ensures it remains the default operating system for the entire AI economy, from massive training runs to split-second inference.

🔎 Gemini’s Very Good Year

For most of 2024, the GenAI story looked simple: ChatGPT dominated, everyone else scrambled.

2025 quietly complicated that narrative.

Released on Christmas Day, new data from Similarweb tells one of the most important AI stories of the year:

Gemini is approaching a 20% share of GenAI website traffic.

ChatGPT has slipped from 87% a year ago to below 70% today.

The shift comes down to distribution.

Gemini now sits inside Google’s core products, including Search, Chrome, Android, and Workspace. It’s turning default placement into habitual usage. When AI lives where people already spend their time, adoption follows naturally.

Two signals stand out from Similarweb’s data:

Grok continues to gain traction, helped by tight integration with X and real-time content.

The market is consolidating into a ‘Big Two’ dynamic, with ChatGPT and Gemini pulling away from a long tail of task-specific tools.

Gemini is the only product meaningfully taking share, and it’s doing so via Google distribution and defaults. As model quality converges, power users might show little loyalty to a single interface. They switch to whatever is cheapest, fastest, or already embedded in their workflow.

That challenges the idea of a lasting moat at the AI interface layer.

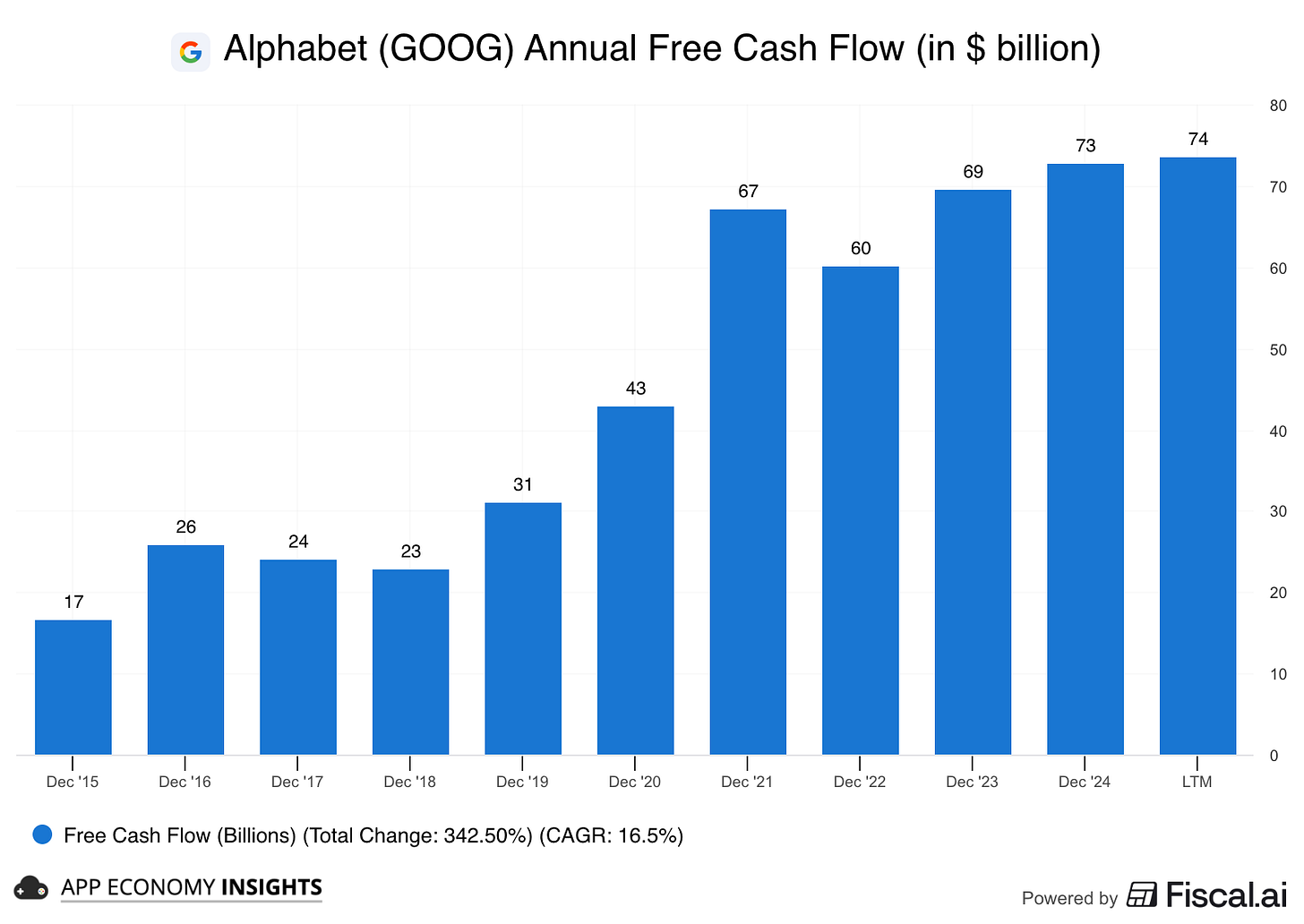

OpenAI doesn’t expect to be profitable until 2030 and, according to Deutsche Bank, could rack up more than $140 billion in cumulative negative free cash flow before getting there. Google’s parent Alphabet, by contrast, generated $74 billion in free cash flow over the past 12 months, even after heavy AI investment.
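One way to size that gap is to express OpenAI’s projected burn in “years of Alphabet free cash flow.” This is a rough sketch using only the two figures above. It assumes Alphabet’s trailing 12-month free cash flow stays flat, which is our simplification and not a forecast, and it treats “more than $140 billion” as exactly $140 billion.

```python
# Figures quoted above, in $ billions.
openai_cumulative_burn = 140  # Deutsche Bank estimate cited above; stated as "more than", so a floor
alphabet_ttm_fcf = 74         # Alphabet free cash flow over the past 12 months

# Assumption (ours): Alphabet's free cash flow holds flat at the trailing 12-month level.
years_of_alphabet_fcf = openai_cumulative_burn / alphabet_ttm_fcf
print(f"{years_of_alphabet_fcf:.1f} years")
```

Put differently, the cash OpenAI may burn through before reaching profitability is about what Alphabet generates in under two years at its current pace.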

That asymmetry matters.

Takeaway: ChatGPT built the category and forced Google to respond. Now Google is using its balance sheet, distribution, and pricing power to compete aggressively, while OpenAI is still figuring out its unit economics. The clock is ticking on whether ChatGPT can meaningfully pressure Search before Gemini closes the gap.

That's it for today.

Happy investing!

Thanks to Fiscal.ai for being our official data partner.

Disclosure: I own GOOG, META, and NVDA in App Economy Portfolio. I share my ratings (BUY, SELL, or HOLD) with App Economy Portfolio members.

Author's Note (Bertrand here 👋🏼): The views and opinions expressed in this newsletter are solely my own and should not be considered financial advice or any other organization's views.