☁️ Micron Rides the AI Boom

High-Bandwidth Memory is driving the up-cycle

Welcome to the Free edition of How They Make Money.

Over 200,000 subscribers turn to us for business and investment insights.


Every advanced piece of technology, from a supercomputer to a smartphone, is a marriage of two distinct functions: logic and memory.

🧠 Logic: These chips are the brains of devices, executing instructions and processing data, central to computers and smartphones.

🗃️ Memory: These chips focus on data storage, including DRAM for temporary storage and NAND for long-term retention.

While we recently discussed the new Intel x NVIDIA deal in the world of logic, AI demand is also creating a boom for memory.

Micron’s Q4 earnings just gave us the clearest signal yet.

Let’s review what we learned.

At a glance:

Micron’s new business units

The HBM-powered recovery

Key quotes from the earnings call

What to watch moving forward

1. Micron’s new business units

Micron is a US-based Integrated Device Manufacturer (IDM), like Intel, but focused on designing and manufacturing memory chips.

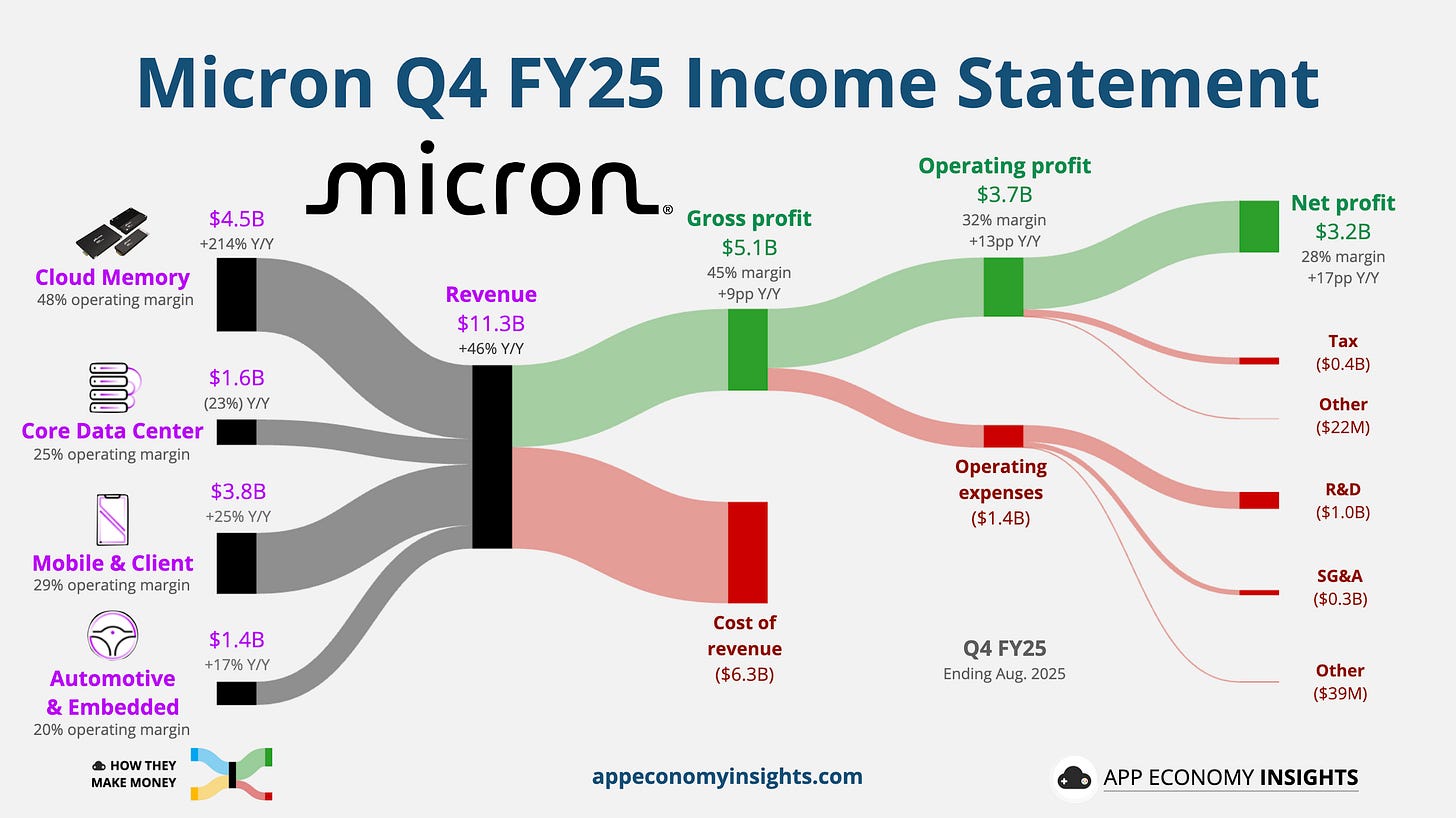

Starting with Q4 FY25 (August quarter), Micron reorganized its entire business into four business units that mirror how customers actually buy memory chips.

Cloud Memory: $4.5 billion in revenue (+214% Y/Y). This is the AI powerhouse. It serves the largest cloud companies and houses all of Micron’s High-Bandwidth Memory (HBM) business. It’s the highest-margin segment (48% operating margin). HBM-specific revenue grew to nearly $2 billion in Q4, implying an annual run rate of ~$8 billion (4 × ~$2 billion).

What is HBM, anyway?

Think of it like building a memory skyscraper right next to the processor, instead of a sprawling, one-story warehouse far away. By stacking DRAM chips vertically, you get incredibly high bandwidth from a smaller footprint. That’s exactly what power-hungry AI chips need. The trade-off? It’s more complex to make and costs more per bit than standard DRAM.
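As a rough illustration of why stacking matters: peak bandwidth is approximately the interface width times the per-pin data rate. The sketch below assumes a 1,024-bit HBM3E stack interface running at 9.2 Gb/s per pin and a 64-bit DDR5-6400 DIMM. These are illustrative, spec-sheet-style figures chosen for the example, not numbers from Micron’s report, and real systems lose some bandwidth to overheads the formula ignores.

```python
def peak_bandwidth_gbps(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8

# Illustrative assumptions, not figures from the earnings report:
hbm3e_stack = peak_bandwidth_gbps(1024, 9.2)  # one HBM3E stack: a very wide interface
ddr5_dimm = peak_bandwidth_gbps(64, 6.4)      # one DDR5-6400 DIMM data bus

print(f"HBM3E stack: {hbm3e_stack:,.1f} GB/s")
print(f"DDR5 DIMM:   {ddr5_dimm:,.1f} GB/s")
print(f"Ratio:       {hbm3e_stack / ddr5_dimm:.0f}x")
```

The gain comes mostly from width: each stack exposes a far wider interface than a DIMM, which is practical largely because the stack sits right next to the processor on the same package.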

Core Data Center: $1.6 billion in revenue (-23% Y/Y). This is the server workhorse: it sells memory and storage to traditional server makers (like Dell and HPE).

Mobile and Client: $3.8 billion in revenue (+25% Y/Y). This unit provides the memory and storage for all our personal gadgets, like phones and PCs.

Automotive and Embedded: $1.4 billion in revenue (+17% Y/Y). This unit supplies chips for cars, industrial machines, and other consumer electronics.

This split is designed to make Micron’s business easier to understand. It makes the economics of the all-important AI memory (HBM) business more transparent and should reduce the “lumpiness” in its quarterly results.

The only catch with the new segmentation? Year-over-year comparisons will be a bit messy for a while. Keep an eye out for when Micron releases restated historical data to make sense of the new segments.
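Until restated history arrives, the segment figures can still be sanity-checked with simple arithmetic: the four units should sum to total revenue, and dividing each by (1 + its Y/Y growth) backs out an implied year-ago figure. A minimal sketch using the rounded numbers above; because the inputs are rounded and the segments are newly defined, treat the implied year-ago total as approximate.

```python
# Q4 FY25 segment revenue ($B) and reported Y/Y growth, from the breakdown above
segments = {
    "Cloud Memory": (4.5, 2.14),
    "Core Data Center": (1.6, -0.23),
    "Mobile and Client": (3.8, 0.25),
    "Automotive and Embedded": (1.4, 0.17),
}

total_now = sum(rev for rev, _ in segments.values())
# Back out each segment's year-ago revenue: prior = current / (1 + growth)
total_prior = sum(rev / (1 + growth) for rev, growth in segments.values())
implied_growth = total_now / total_prior - 1

print(f"Q4 FY25 total:          ${total_now:.1f}B")
print(f"Implied year-ago total: ${total_prior:.2f}B")
print(f"Implied Y/Y growth:     {implied_growth:.0%}")
```

With these inputs, the segments sum to $11.3 billion and the implied total growth comes out at roughly 46%, in line with the headline growth figure.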

This new structure gives us a perfect lens to view the most important story in the memory market today: the dramatic AI-driven cycle.

Numbers at a glance:

Q4 FY25 (August quarter):

Revenue grew 46% Y/Y to $11.3 billion ($0.2 billion beat).

Gross margin was 45% (+9pp Y/Y).

Non-GAAP EPS $3.03 ($0.17 beat).

Q1 FY26 guidance (November quarter):

Revenue ~$12.5 billion ($0.7 billion beat).

Gross margin > 50%.

Non-GAAP EPS ~$3.75 ($0.71 beat).
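A “beat” is the gap between the reported (or guided) figure and the analyst consensus, so the implied consensus is simply the value minus the beat. The sketch below applies that to the four figures above and also computes the guided sequential (Q/Q) revenue growth. It assumes the rounded beat amounts are exact.

```python
# (reported or guided value, beat vs. consensus), from the numbers above
metrics = {
    "Q4 FY25 revenue ($B)": (11.3, 0.2),
    "Q4 FY25 non-GAAP EPS ($)": (3.03, 0.17),
    "Q1 FY26 revenue guide ($B)": (12.5, 0.7),
    "Q1 FY26 non-GAAP EPS guide ($)": (3.75, 0.71),
}

implied_consensus = {}
for name, (value, beat) in metrics.items():
    consensus = value - beat  # consensus = reported/guided value minus the beat
    implied_consensus[name] = consensus
    print(f"{name}: implied consensus {consensus:.2f}, beat of {beat / consensus:.1%}")

# Guided sequential growth: Q4 FY25 revenue to the ~$12.5B Q1 FY26 guide
sequential_growth = 12.5 / 11.3 - 1
print(f"Guided Q/Q revenue growth: {sequential_growth:.1%}")
```

Framed this way, the Q1 EPS guide sits roughly 23% above an implied consensus of about $3.04, which makes the muted market reaction all the more telling.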

Market reaction was timid. Micron’s stock (MU) has nearly doubled so far in 2025. Wall Street’s expectations were so high that even a great report was seen as a slight letdown. This highlights the frenzy and optimism already priced into the stock.

2. The HBM-powered recovery

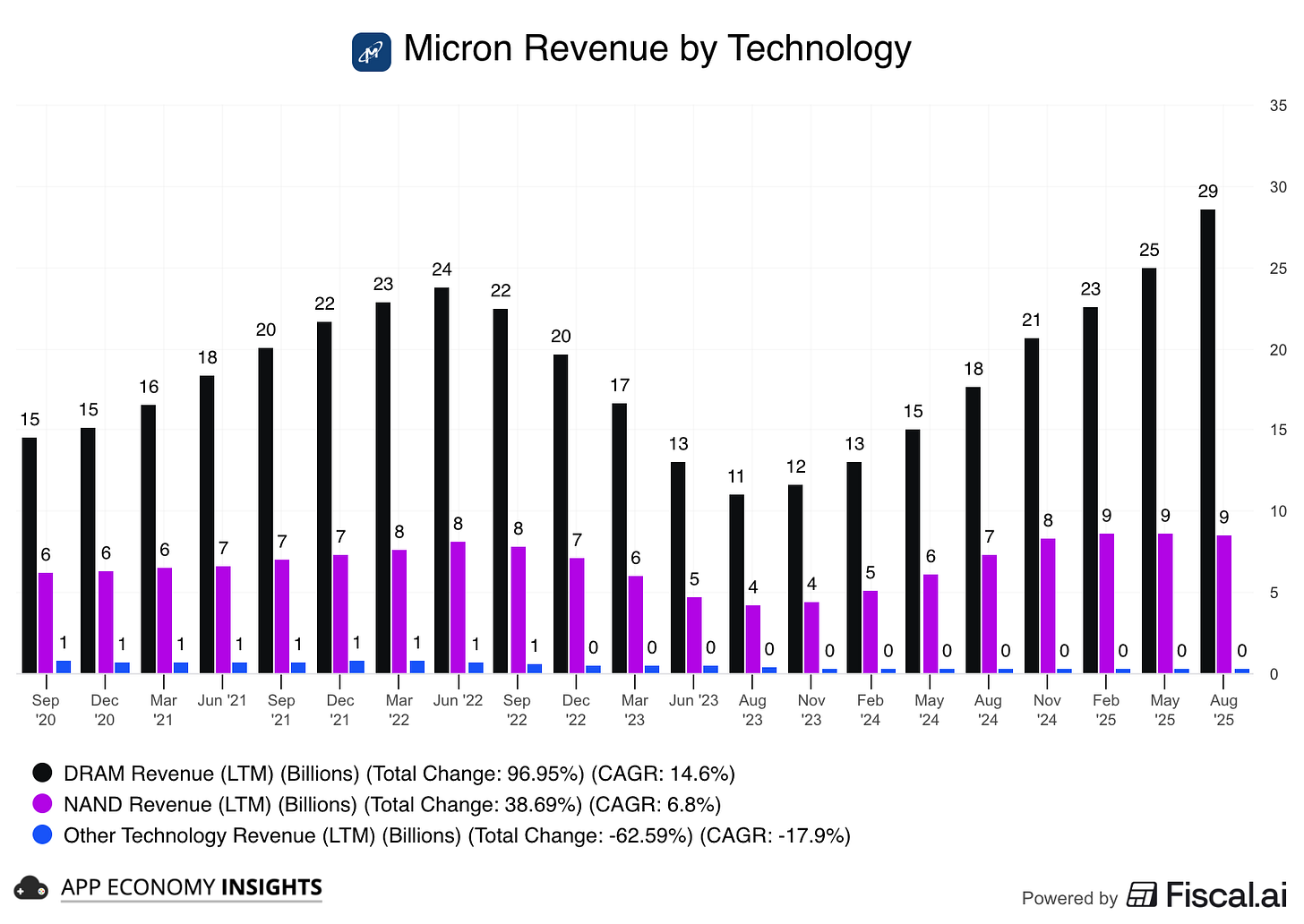

DRAM (including HBM) is the engine. LTM DRAM revenue fell from the 2022 peak, bottomed in mid-2023, and has re-accelerated to ~$29 billion LTM (see visual). That rebound reflects the HBM ramp plus DDR5 strength.

NAND is stabilizing. After a long slide, NAND has climbed back to ~$9 billion LTM, helped by enterprise SSD and early AI PC tailwinds.

Why it matters

As HBM mix rises, DRAM dollars rise disproportionately (higher ASPs).

NAND benefits from the enterprise and client SSD recovery, smoothing cyclicality.
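Why does mix lift DRAM dollars “disproportionately”? Revenue is bits times price, so moving bits into a higher-priced product raises revenue even if total bits stay flat. A minimal sketch with hypothetical inputs: 100 units of bits, a normalized standard-DRAM price of 1.0 per bit, and HBM priced at 3× per bit. The 3× premium is an assumption for illustration, not a Micron figure.

```python
def dram_revenue(total_bits: float, hbm_share: float, base_asp: float, hbm_premium: float) -> float:
    """Revenue = standard bits x base ASP + HBM bits x (base ASP x premium)."""
    hbm_bits = total_bits * hbm_share
    standard_bits = total_bits - hbm_bits
    return standard_bits * base_asp + hbm_bits * base_asp * hbm_premium

# Hypothetical inputs: same total bits, only the HBM share of bits changes
before = dram_revenue(100, hbm_share=0.10, base_asp=1.0, hbm_premium=3.0)
after = dram_revenue(100, hbm_share=0.20, base_asp=1.0, hbm_premium=3.0)

print(f"Revenue with 10% HBM bits: {before:.0f}")
print(f"Revenue with 20% HBM bits: {after:.0f}")
print(f"Revenue growth with zero bit growth: {after / before - 1:.1%}")
```

In this toy example, doubling the HBM share of bits lifts revenue about 17% with no bit growth at all. It deliberately ignores that HBM also costs more to make per bit, so it says nothing about margins.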

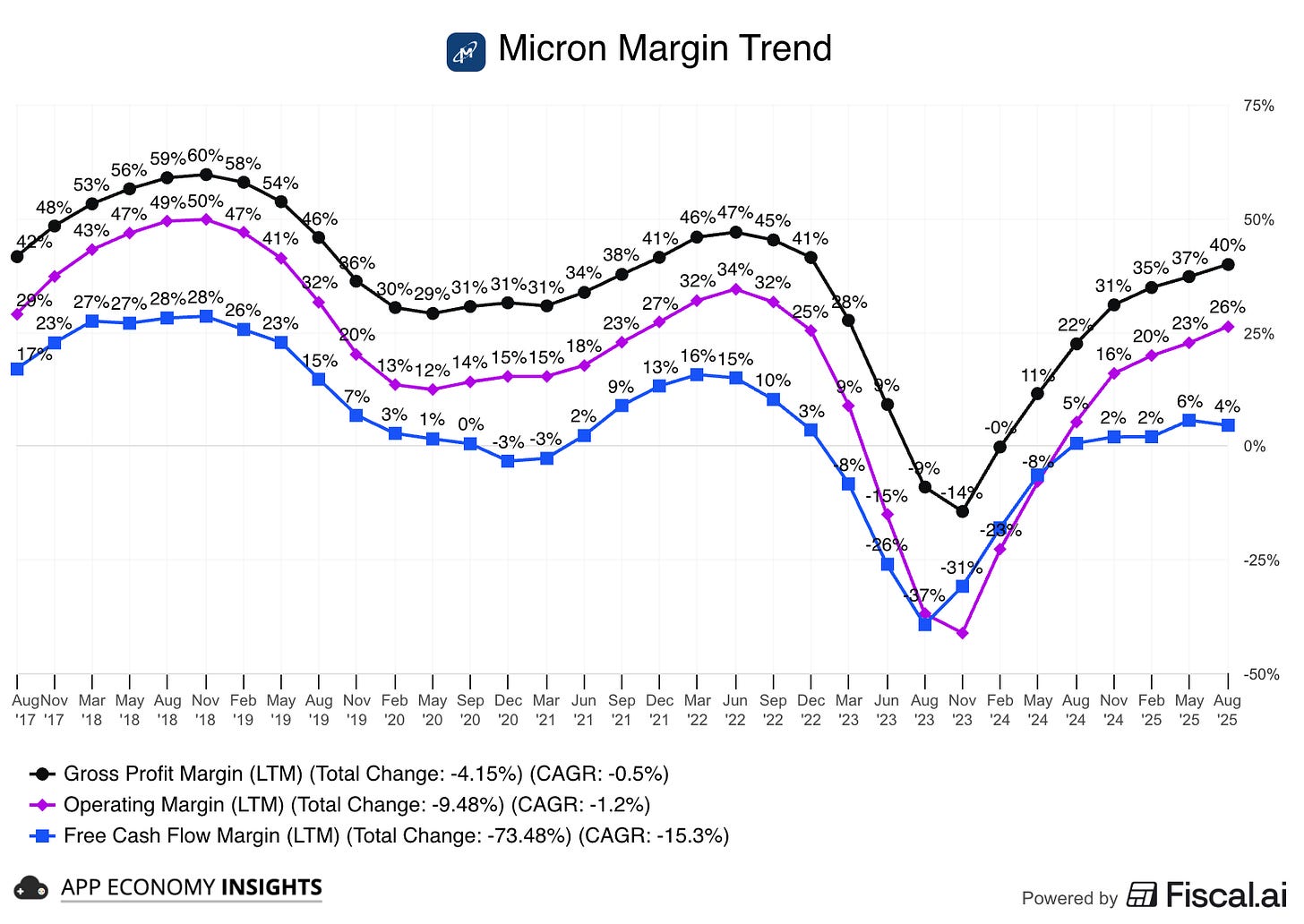

The memory up-cycle is here, led by HBM-driven DRAM. Micron’s new segment split reveals a clear path to higher profitability. As the mix shifts toward premium HBM products, it should raise the company’s gross margin ceiling, potentially breaking the boom-and-bust cycles of the past (see visual).

Micron’s print confirms memory remains the gating factor for AI systems in the near term. HBM inventory is tight, and gross margin is tracking to cross 50%.

3. Key quotes from the earnings call


CEO Sanjay Mehrotra on momentum:

“The combined revenue from HBM, high-capacity DIMMs, and LP server DRAM reached $10B, more than 5× last year.”

AI data-center memory is now a franchise line, not an experiment. Because the figure spans high-capacity DIMMs and LP server DRAM as well as HBM, it shows AI is lifting the entire data center market. The recovery is broad-based, which adds another powerful (and somewhat unexpected) tailwind to the Micron story.

On competition:

“We are pleased to note that our HBM share is on track to grow again and be in line with our overall DRAM share in this calendar Q3, delivering on our targets that we have discussed for several quarters now.”

Micron and SK Hynix have gained a first-mover advantage against market leader Samsung by being faster to market with the latest generations of HBM.

“As the only US-based memory manufacturer, Micron is uniquely positioned to capitalize on the AI opportunity ahead.”

This supply-chain and policy angle could resonate with US hyperscalers.

On HBM customer concentration:

“Our HBM customer base has expanded and now includes six customers. We have pricing agreements with almost all customers for a vast majority of our HBM3E supply in calendar 2026. We are in active discussions with customers on the specifications and volumes for HBM4, and we expect to conclude agreements to sell out the remainder of our total HBM calendar 2026 supply in the coming months.”

Micron expanded its HBM customer base from four in Q3 to six in Q4, and it has essentially sold out its premium product more than a year in advance. That underscores the desperation of AI companies to secure memory supply. This is a real up-cycle, not a one-quarter blip.

CFO Mark Murphy on expectations:

“These supply-demand factors are there—we believe they’re durable. On the demand side, data center spend continues to increase. [...] On the supply side, customer inventory levels are healthy. Our supply is lean. Our DRAM inventories are below target.”

Murphy is making the case that strong profitability is set to continue, justifying the high expectations. However, the company notably declined to comment on whether HBM margins specifically would remain at today’s level.

4. What to watch moving forward

The memory up-cycle is in full swing, so here’s what to watch as the story unfolds.

Key metrics

The HBM engine: HBM revenue is already at a ~$8 billion annual run rate. Watch bit growth versus ASP changes each quarter to see whether volume or pricing is doing the lifting.

The customization play: Watch for more news on HBM4E (coming in 2027), the next generation of memory after HBM4. Management signaled they will offer customized versions in partnership with TSMC, which could unlock higher, more defensible margins in the long run.

Production & pricing: Keep an eye on packaging throughput (the main bottleneck for HBM supply) and NAND pricing. Any sign of wavering on production discipline could signal a return to the industry’s old habit of overbuilding capacity.

Capex signals: Micron plans higher CapEx in FY26, a bullish signal about expected demand. Watch for updates to that plan throughout the year.
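The “bit growth vs ASPs” check follows from revenue = bits × average selling price, so (1 + revenue growth) = (1 + bit growth) × (1 + ASP growth). The sketch below backs out the implied ASP change and uses a log split (one common convention, not Micron’s own method) to attribute growth to volume versus pricing. The inputs are hypothetical, not reported figures.

```python
import math

def implied_asp_growth(revenue_growth: float, bit_growth: float) -> float:
    """Revenue = bits x ASP, so (1 + g_rev) = (1 + g_bits) x (1 + g_asp)."""
    return (1 + revenue_growth) / (1 + bit_growth) - 1

def volume_share_of_growth(revenue_growth: float, bit_growth: float) -> float:
    """Fraction of log revenue growth explained by bit volume; the rest is pricing."""
    return math.log(1 + bit_growth) / math.log(1 + revenue_growth)

# Hypothetical quarter: HBM revenue +20% Q/Q on +5% bit shipments
rev_g, bits_g = 0.20, 0.05
print(f"Implied ASP change: {implied_asp_growth(rev_g, bits_g):.1%}")
print(f"Share of growth from volume: {volume_share_of_growth(rev_g, bits_g):.0%}")
```

With +20% revenue on +5% bits, the implied ASP change is about +14%, so pricing would be doing most of the lifting in that hypothetical quarter.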

Potential risks

Execution bar: Higher CapEx raises the need to hit yield/throughput targets for both HBM and non-HBM DRAM.

Supply & demand hiccups: Watch HBM yields and cleanroom capacity, since a shortage of manufacturing space will define industry-wide supply.

Pricing & customer concentration: Wall Street expects a pricing step-down for HBM3E as a few big AI buyers lock in long-term agreements, though Micron says its 2026 pricing is mostly locked in.

The investor view

Ultimately, two clear narratives have emerged:

📈 Bull case: This is the first prolonged up-cycle since 2018, driven by broad-based strength. With HBM sold out, six customers, and the HBM4 ramp on the horizon, momentum could last for a while.

📉 Bear case: While the HBM story is strong, margin premiums may narrow next year. With the stock near peak valuation on key metrics, the risk/reward is less compelling.

The story is clear: a structural shift to high-margin HBM is underway. The data center business has shattered its old ceiling (growing from a third of overall revenue to more than half), proving that AI has reset the memory playbook.
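The “more than half” figure can be checked against the Q4 FY25 segment numbers. This sketch assumes “data center” means Cloud Memory plus Core Data Center; the author may define it differently.

```python
# Q4 FY25 segment revenue in $B, from the segment breakdown
revenue = {
    "Cloud Memory": 4.5,
    "Core Data Center": 1.6,
    "Mobile and Client": 3.8,
    "Automotive and Embedded": 1.4,
}

# Assumption: data center = Cloud Memory + Core Data Center
data_center = revenue["Cloud Memory"] + revenue["Core Data Center"]
share = data_center / sum(revenue.values())
print(f"Data center revenue: ${data_center:.1f}B ({share:.0%} of total)")
```

On that definition, data center revenue is about $6.1 billion, roughly 54% of the $11.3 billion total.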

This up-cycle could raise the industry’s margin floor and smooth future swings. But remember, cycles don’t vanish. In investing, the most dangerous words are “it’s different this time.”

That's it for today.

Happy investing!


Thanks to Fiscal.ai for being our official data partner.

Disclosure: I own AMD, ASML, and NVDA in App Economy Portfolio. I share my ratings (BUY, SELL, or HOLD) with App Economy Portfolio members.

Author's Note (Bertrand here 👋🏼): The views and opinions expressed in this newsletter are solely my own and should not be considered financial advice or any other organization's views.
