🧠 GPT-5: Who's Impacted?

The public-market read-through from AI’s next leap

Welcome to the Free edition of How They Make Money.

Over 200,000 subscribers turn to us for business and investment insights.

In case you missed it:

GPT-5 has become the default in ChatGPT.

OpenAI CEO Sam Altman says it’s like having a “team of PhD-level experts,” though it’s still not hallucination-proof.

This isn’t a GPT-4-style leap so much as a product gear shift. The upgrades are subtle: performance and cost optimization over headline features.

GPT-5 doesn’t dominate the benchmarks as clearly as expected, which points to a world where models are becoming commoditized. That tilts the game to pricing and distribution, not bragging rights.

As usage rises, who gets paid? Silicon, cloud, platforms, or apps?

Let’s map where the money goes and the KPIs to watch.

Today at a glance:

🧠 GPT-5 is here

🏗️ AI stack economics

☁️ CoreWeave readout

🧭 The investor lens

🧠 GPT-5 is here

What happened?

GPT-5 had an Apple-esque launch: high polish, tight demos, chart crimes, and a few typos. But the message was clear: faster, more capable, and better at deciding when to think longer.

The quick facts:

Modes: Auto-switches between chat and “Thinking” for harder tasks.

Context: Up to 400k tokens in the API (with an output cap).

Focus: Better reasoning/coding, fewer hallucinations, more reliable responses.

Access: Available across ChatGPT tiers. Paid tiers keep a model picker.

Scale: ~700 million people use ChatGPT weekly, so default changes matter.


How good is it?

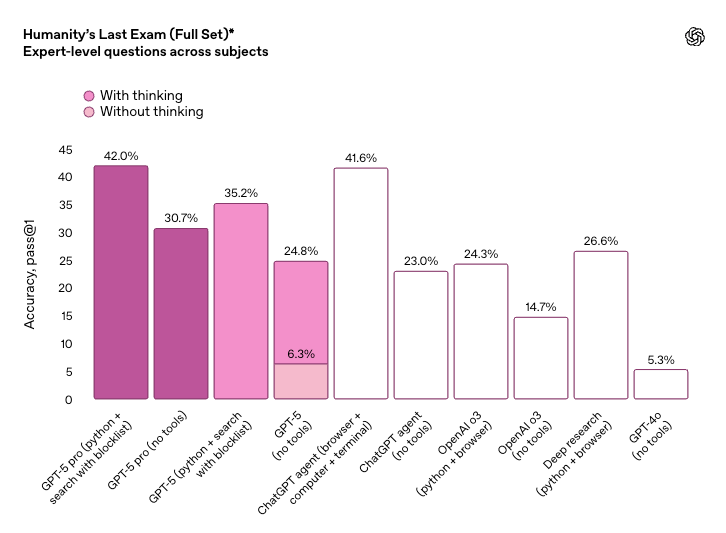

Humanity’s Last Exam is a 2,500-question benchmark from CAIS and Scale AI that tests expert-level reasoning across disciplines. OpenAI’s materials show GPT-5 Pro scoring 42%, near the top of reported runs. xAI’s Grok 4 Heavy (with tools) is cited around 44%. Because results depend heavily on tool use and setup, treat comparisons as directional.

Taken together, these results put GPT-5 among the leaders but not clearly ahead. Unlike GPT-4’s 2023 debut, it doesn’t crush the benchmarks.

Why it matters.

If we look beyond the noise around the initial release, GPT-5 is undoubtedly a smarter model. A smarter default lowers friction. Lower friction usually drives more usage, and rising usage tends to show up in three places:

Apps monetize through seats and overage.

Clouds monetize through GPU hours and long-term commitments.

Chip suppliers monetize through capacity builds.

In the near term, the scoreboard that matters isn’t a benchmark table. It’s reliability, latency, and delivered cost per task. Those three KPIs determine who expands margins as adoption of shiny new models spreads.
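To make "delivered cost per task" concrete, here is a minimal sketch of how reliability feeds into unit cost. Every name and number in it is a hypothetical placeholder (token counts, prices, success rate), not a figure from OpenAI or any vendor. It also assumes failed attempts are simply retried and are independent of each other.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    """Hypothetical per-attempt profile for one task type (illustrative values only)."""
    input_tokens: int
    output_tokens: int
    price_in_per_1m: float   # USD per 1M input tokens (assumed)
    price_out_per_1m: float  # USD per 1M output tokens (assumed)
    success_rate: float      # share of attempts that yield a usable result


def cost_per_attempt(t: TaskProfile) -> float:
    """Raw model cost of a single attempt, in USD."""
    return (t.input_tokens * t.price_in_per_1m
            + t.output_tokens * t.price_out_per_1m) / 1_000_000


def delivered_cost_per_task(t: TaskProfile) -> float:
    """Expected cost per successful task when failed attempts are retried.

    With independent attempts, the expected number of attempts until the
    first success is 1 / success_rate.
    """
    if not 0 < t.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt(t) / t.success_rate


if __name__ == "__main__":
    flaky = TaskProfile(2_000, 500, 1.0, 10.0, success_rate=0.8)
    solid = TaskProfile(2_000, 500, 1.0, 10.0, success_rate=1.0)
    print(f"flaky: ${delivered_cost_per_task(flaky):.5f} per task")
    print(f"solid: ${delivered_cost_per_task(solid):.5f} per task")
```

Under these assumptions, the same list price yields a higher delivered cost when reliability is lower, which is why a cheaper but flakier model can still lose on cost per task.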

🏗️ AI stack economics

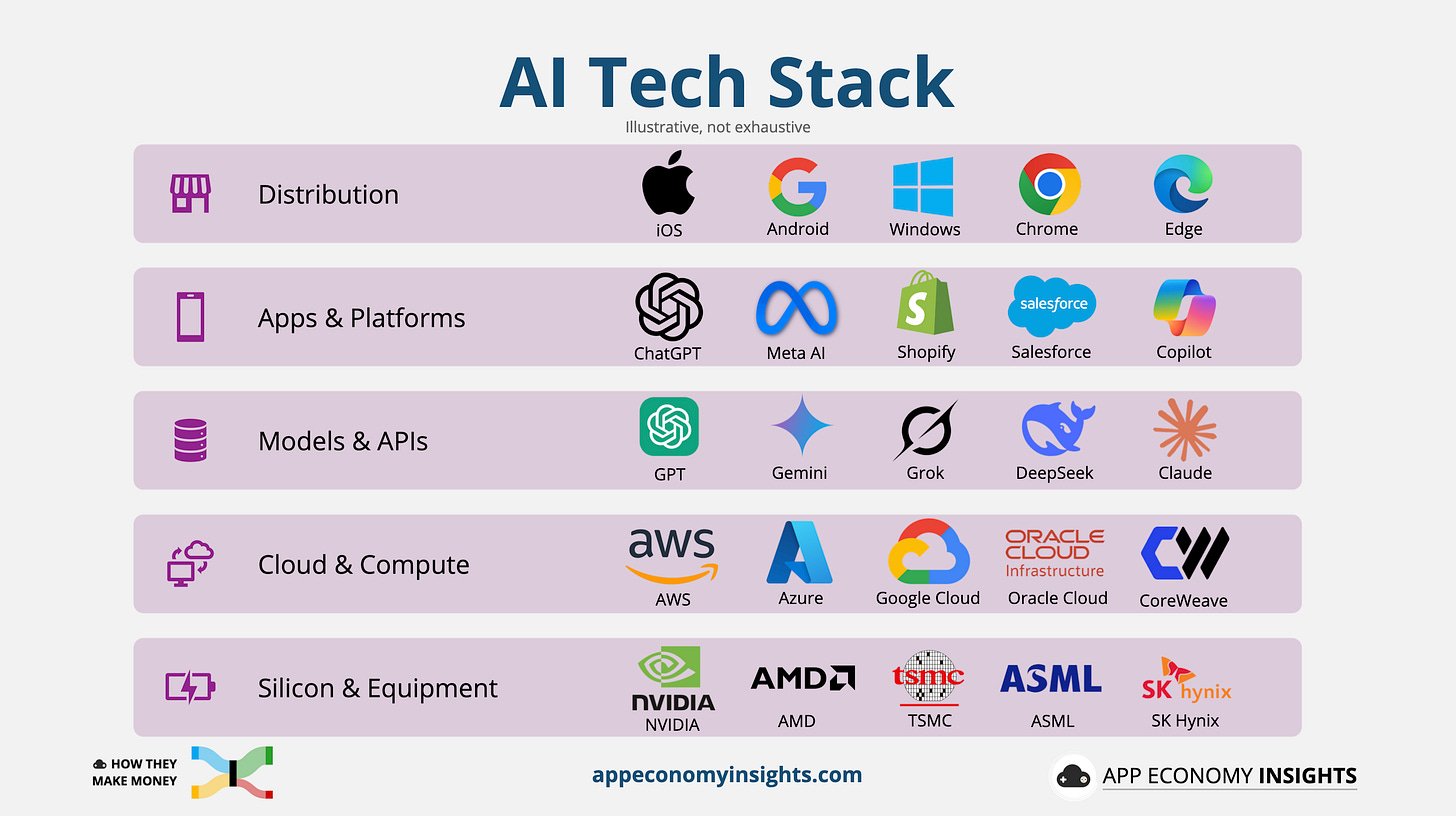

In the AI cycle, dollars flow bottom-up:

Revenue recognition hits silicon on shipment,

then clouds as utilization catches up,

then software once AI features actually monetize.

In a mature stack, a usage bump hits cloud/model API spend first. Silicon moves only when utilization stays high enough to trigger capacity adds. Apps depend entirely on pricing design (seat, usage, or outcome).

How a new dollar travels

A customer pays for features or outcomes in an app or platform. That app calls a model API or a hosted model. The request runs on cloud GPUs/NPUs. Sustained demand drives capacity additions at chipmakers and equipment suppliers. One user action, many ledgers.

📱 Apps & platforms

What changes: Engagement will increase with GPT-5 as the new default and more model releases from competitors on the horizon. Software monetization depends on pricing design (seat add-ons, usage overage, outcome fees). Speed and consistency unlock broader rollouts, while flaky UX stalls them.

P&L (where it shows):

Revenue: AI tiers, usage overage, outcome/workflow pricing, bundles.

COGS: inference (API or self-host), eval/guardrails.

Margin levers: packaging/price tests, orchestration efficiency, partial on-device.

Who’s impacted (examples): ADBE · CRM · NOW · INTU · SHOP · DUOL.

What to watch: AI product attach %, ARPU lift, $/task trend, net retention, RPO.
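For readers who want the attach and ARPU math spelled out, here is a rough sketch. It assumes the simplest case, a flat per-seat AI add-on with no usage overage, and every input is a made-up illustration rather than company data.

```python
def attach_rate(ai_seats: int, total_seats: int) -> float:
    """Share of paying seats that also pay for the AI add-on."""
    if total_seats <= 0:
        raise ValueError("total_seats must be positive")
    return ai_seats / total_seats


def blended_arpu(base_price: float, addon_price: float, attach: float) -> float:
    """Average revenue per seat when a fraction `attach` buys the add-on."""
    return base_price + addon_price * attach


def arpu_lift(base_price: float, addon_price: float, attach: float) -> float:
    """Relative ARPU increase versus a world with no AI add-on."""
    return blended_arpu(base_price, addon_price, attach) / base_price - 1


if __name__ == "__main__":
    attach = attach_rate(ai_seats=150, total_seats=1_000)  # hypothetical seats
    print(f"attach: {attach:.0%}")
    print(f"ARPU lift: {arpu_lift(50.0, 20.0, attach):.1%}")  # hypothetical prices
```

The point of the sketch is that a pricey add-on with low attach moves ARPU less than the price tag suggests, so both numbers need to be disclosed to judge monetization.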

🧠 Models & APIs

What changes: Newer models mean higher call volume from default routing and rising enterprise demand for private deployments and fine-tunes, with reliability and latency driving stickiness.

P&L (where it shows):

Revenue: per-token/compute usage, fine-tuning, private tenancy, eval/guardrail add-ons.

COGS: serving infra, safety layers, data/licensing.

Margin levers: batching, quantization, caching, tokenizer/context tricks.

Who’s impacted (examples): GOOG (Gemini) · META (Llama hosting) · MSFT (Azure OpenAI) · IBM (watsonx) · BIDU (ERNIE) · and private companies like xAI (Grok) and Anthropic (Claude).

What to watch: effective $/1k tokens, latency/uptime, enterprise mix, net retention.

☁️ Cloud & compute

What changes: More GPU hours, longer commitments; better depreciation leverage as utilization rises. Energy/networking can offset gains.

P&L (where it shows):

Revenue: AI instances, GPU hours, reserved capacity; software attach.

COGS: depreciation from prior capex, power/networking.

Margin levers: utilization, scheduling, DC efficiency, software attach.

Who’s impacted (examples): MSFT · AMZN · GOOGL · ORCL · CRWV

What to watch: utilization %, backlog/commitments, GPU-hours growth, gross-margin bridge (utilization vs energy).
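The "utilization vs energy" bridge can be sketched with a toy model. It assumes depreciation is a fixed cost per available GPU hour while power and networking scale with billed hours. All prices and costs below are hypothetical round numbers, not any provider's actual economics.

```python
def gross_margin(price_per_hour: float,
                 utilization: float,
                 fixed_cost_per_available_hour: float,
                 variable_cost_per_billed_hour: float) -> float:
    """Gross margin for one GPU over one available hour.

    Revenue and variable costs (power, networking) scale with utilization;
    depreciation is incurred whether or not the GPU is rented out.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    revenue = price_per_hour * utilization
    cogs = fixed_cost_per_available_hour + variable_cost_per_billed_hour * utilization
    return (revenue - cogs) / revenue


if __name__ == "__main__":
    # Hypothetical inputs: $3.00/hour price, $1.00/hour depreciation.
    for label, util, energy in [
        ("50% utilization", 0.5, 0.5),
        ("80% utilization", 0.8, 0.5),
        ("80% utilization, pricier energy", 0.8, 0.8),
    ]:
        print(f"{label}: {gross_margin(3.0, util, 1.0, energy):.1%}")
```

In this toy setup, higher utilization spreads the fixed depreciation over more billed hours and lifts margin, while a higher energy cost gives part of that gain back.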

⚙ Silicon & equipment

What changes: If elevated utilization persists, capacity adds follow (accels, HBM, networking, tools). Mix shifts (training ↔ inference SKUs) matter for margin.

P&L (where it shows):

Revenue: accelerators/networking; wafers; EUV/High-NA tools.

COGS/Cash: yields, inventory cycles; prebuys/long-lead deposits.

Margin levers: high-end mix, software/SDK attach, supply tightness.

Who’s impacted (examples): NVDA · AMD · AVGO · TSM · ASML

What to watch: Data Center revenue mix, lead times, backlog duration/quality, gross-margin trajectory.

Training vs. inference (different economics)

🎓 Training hits cash first (capex at clouds), then flows into depreciation inside COGS over time. It pulls forward revenue for silicon and equipment.

🤖 Inference is an ongoing variable cost for apps/platforms (or a pass-through if priced per token). Unit cost falls with better batching, quantization, caching, and partial on-device execution. Reliability and latency shape what you can charge.

What matters next: For software companies, watch AI revenue call-outs during earnings. For cloud, watch utilization and GM bridges. For chips, watch lead times and backlog. Elsewhere, watch the $/task trend. Those tell you who’s keeping the surplus as usage scales with new models like GPT-5.

☁️ CoreWeave readout

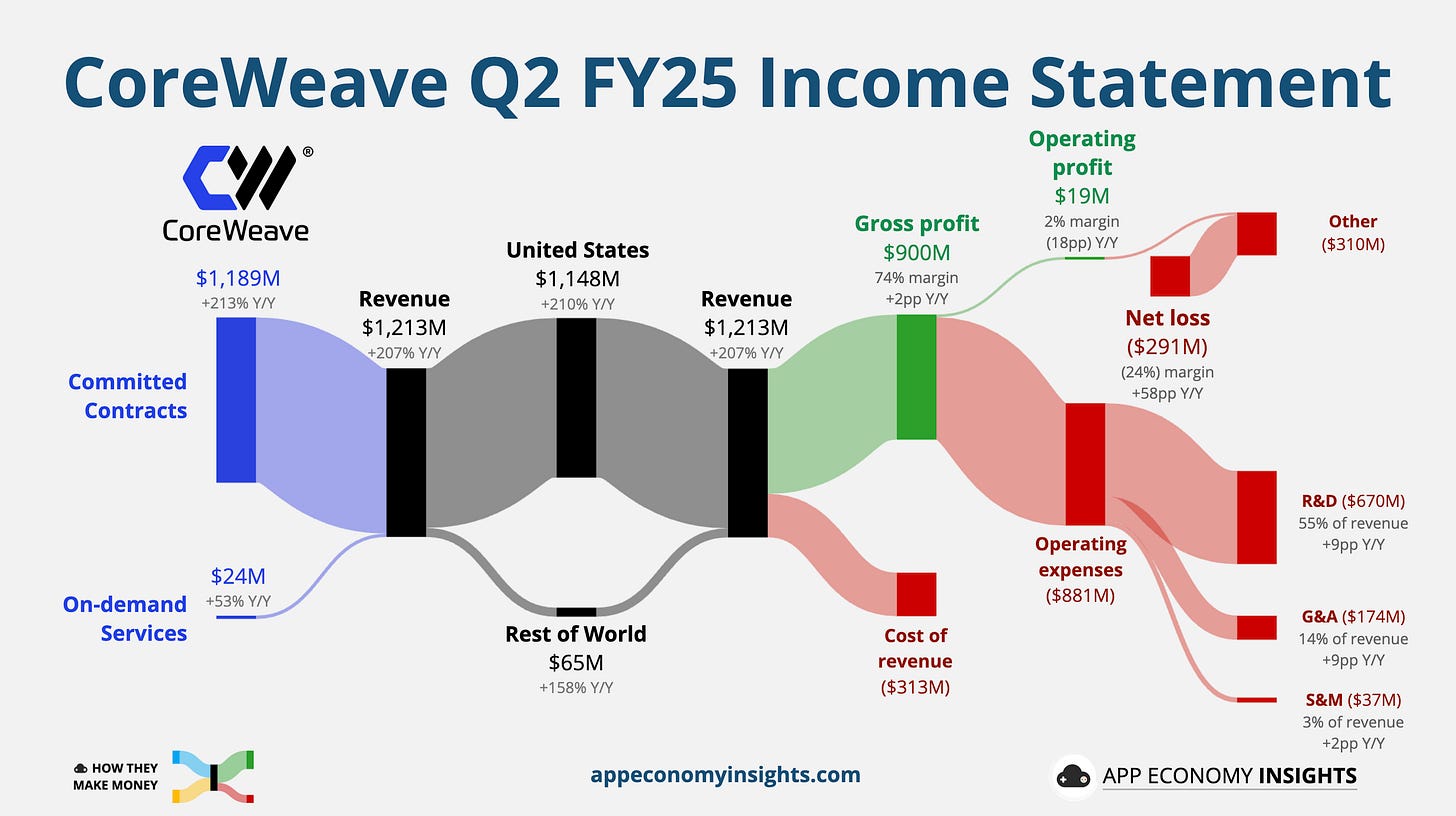

CoreWeave’s quarter had two truths at once:

Demand is roaring, profits aren’t

Revenue surged 3x Y/Y to $1.21 billion ($130 million beat). The company had a small operating profit, but interest expense of nearly $0.3 billion on $11 billion of debt weighed on results. The loss per share (-$0.60) came in wider than expected, causing the stock to drop.

The order book is getting heavier. Management flagged a $30.1 billion revenue backlog (+16% Q/Q), and reiterated the storyline you’re hearing across AI infra: demand continues to outstrip supply. They notably added a $4 billion extension from OpenAI.

That shows up in the outlook too: FY25 revenue guidance was raised by $250 million to $5.15–$5.35 billion. The market didn’t celebrate (the new demand from OpenAI was expected), but the forward signals are positive.
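A quick back-of-the-envelope pass over the figures quoted above helps size the story. The inputs are the rounded numbers from this section. The derived ratios are rough approximations that assume simple (non-compounded) annualization and that the guidance raise applies to the midpoint.

```python
# Figures as quoted in the text above (USD billions); rounding is the source's.
REVENUE_Q = 1.21
INTEREST_Q = 0.30            # "nearly $0.3 billion", so treat as an upper bound
DEBT = 11.0
BACKLOG = 30.1
BACKLOG_QOQ_GROWTH = 0.16
GUIDE_LOW, GUIDE_HIGH = 5.15, 5.35
GUIDE_RAISE = 0.25           # assumed to apply to the midpoint


def readout() -> dict[str, float]:
    """Derive rough ratios from the quoted quarter; all outputs are approximations."""
    guide_mid = (GUIDE_LOW + GUIDE_HIGH) / 2
    return {
        "interest_share_of_revenue": INTEREST_Q / REVENUE_Q,
        "annualized_interest_rate": 4 * INTEREST_Q / DEBT,
        "implied_prior_backlog": BACKLOG / (1 + BACKLOG_QOQ_GROWTH),
        "guide_mid": guide_mid,
        "implied_prior_guide_mid": guide_mid - GUIDE_RAISE,
        "backlog_years_at_guide_mid": BACKLOG / guide_mid,
    }


if __name__ == "__main__":
    for name, value in readout().items():
        print(f"{name}: {value:.3f}")
```

On these rounded inputs, interest consumes roughly a quarter of quarterly revenue, the implied simple annualized rate on the debt is about 10.9%, the prior-quarter backlog works out to about $25.9 billion, and the backlog equals roughly 5.7 years of revenue at the FY25 guidance midpoint.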

Buying time with M&A and product

CoreWeave closed the ~$1.7 billion acquisition of Weights & Biases, folding model monitoring and developer workflows into the platform. Management has been touting early Blackwell availability at scale, useful talking points when customers want both capacity and a cleaner ops experience. Meanwhile, the pending $9 billion Core Scientific deal is a straight capacity and power play: more sites, more megawatts, faster.

For now, operating expenses are outrunning revenue. But if backlog keeps building and customers keep shifting inference to specialized GPU clouds, CoreWeave remains a clean demand proxy for silicon (NVDA et al.) and a use-hours proxy for the broader cloud cycle.

What to watch next: Utilization and booked capacity (does backlog convert?), any color on pricing as Blackwell ramps, and whether the W&B integration surfaces higher-margin software attach. If those move the right way, the earnings math gets easier, long before a new data center comes online.

🧭 The investor lens

Benchmarks about the latest “best models” make headlines, but economics move stocks. Over the next 3 to 5 years, the winners are the companies that turn their models into durable revenue and expanding margins and can show it in the numbers, not the demos.

What matters most

Distribution power. Defaults, OS/browser/device placement, and enterprise bundling decide adoption speed. A great model without distribution looks like a feature. With distribution, it looks like a standard.

The cost curve. Watch the delivered cost per task. Routing, batching, quantization, caching, and partial on-device execution push it down. Energy and networking push it up. Margin accrues to whoever bends this curve the fastest.

Reliability and latency. These unlock outcome pricing, agentic workflows, and renewal quality. If responses are slow or inconsistent, customers cap usage. Benchmarks don’t matter if the product doesn’t feel fast and dependable.

Disclosure quality. Clear KPI breakouts (utilization, attach, commitments, $/task trend) deserve a valuation premium. Vague roll-ups don’t.

Two swing factors

Capacity cycle. If supply stays tight, pricing power and utilization support margins. But eventually, supply will outrun demand.

Regulatory & licensing drag. Content deals, provenance audits, and safety/usage rules can shift who pays for what (and when).

What to watch each quarter

Attach & ARPU: Are AI tiers creating real uplift without raising churn?

Utilization & commitments: Are GPU hours rising and contract durations extending?

Gross-margin trend: Is depreciation leverage outpacing energy/inference costs?

Lead times & backlog quality: Are orders firming or slipping?

On-device share: Any visible shift of inference away from the cloud? Are more devices shipped with a capable Neural Processing Unit?

We’ll track these KPIs in our earnings coverage in the coming quarters and show them in clean visuals. As software, cloud, and silicon leaders report their performance, you’ll see where the dollars moved.

That’s How They Make Money.

That’s it for today!

Stay healthy and invest on.


Disclosure: I own AAPL, ADBE, AMD, AMZN, ASML, CRM, DUOL, GOOG, INTU, META, NVDA, SHOP, and TSM in App Economy Portfolio, our investing service, where we identify and accumulate shares of exceptional companies—from fast-growing disruptors to proven cash machines.

If thoughtful, long-term investing resonates with you, join our investing community and get instant access to our curated stock portfolio.

Author's Note (Bertrand here 👋🏼): The views and opinions expressed in this newsletter are solely my own and should not be considered financial advice or the views of any other organization.